Scarlet Nebula

Cloud Management Toolkit in Java

Monday 9 May 2011

Progress

Tuesday 3 May 2011

Even more progress this week

- Add new firewalls

- Delete existing firewalls

- Add new rules to a firewall

- Remove rules from a firewall

ives ~/scarletnebula/Scarlet Nebula $ git diff --stat "@{one week ago}" HEAD | tail -n 1

125 files changed, 5245 insertions(+), 2536 deletions(-)

Monday 2 May 2011

Another week's progress

- Created the CloudProvider properties screen, which for the moment consists only of an SSH key tab (maybe more will come, maybe not...)

- Made the "Create new Server"-server open with a single click instead of a double click (Ruben's suggestion)

- When entering tags, if the user neglects to press ENTER the last time to add the tag to the taglist, the tag is automatically added.

- Wrote a KeyList component for displaying SSH keys. This new one had to be written because the previous one was not able to display a radio button which would be selected when that key is the default key for that provider. Behind the scenes this new KeyList is a JTable with a custom TableCellRenderer, CellEditor and ItemListener. Creating the KeyList also required workarounds for several bugs in Swing related to displaying interactive components in a JTable.

- Created the New Key wizard, that creates a new SSH key and registers it with the CloudProvider.

- Finished the Import key wizard, which allows the user to pick a key file from disk. Also made the part that loads the available CloudProvider keys asynchronous.

- Made it so when a connection to the server cannot be established, no graph is displayed in the server component and the "View statistics" and "Start terminal" options in the context menu are disabled.

- Changed the appearance of the Toolbar-style buttons in e.g. Server Properties (the ones next to the labels). These were previously weirdly styled JButtons, but even after hours and hours of work, which included reading a paper about Nimbus (the Look & Feel I'm using), they still caused alignment problems (JButtons in Nimbus have invisible Borders which screw up my form layout when they're present but on the other hand they have to be present or the contents of the button will be obscured by the actual button edge). Currently these buttons are simple JLabels with an easy MouseListener to listen for clicks (which fires all ActionListeners) and mouse hovers. This change should also improve compatibility for people who can't use Nimbus (probably due to them not running the Oracle JRE).

- Display the union of the tags of all selected servers when displaying the properties of multiple servers.

- Fixed bug that allowed you to add the same tag twice to the taglist.

- Made a better Server size-selection slide in the Add Server Wizard. This slide knows the architecture you chose in the previous (Image) slide so it displays only relevant information. Information displayed per instance size includes the number of CPUs, memory and hard drive size. I'm getting pretty good at writing ListCellRenderers...

- And some other minor things...
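
Two of the tag-related items above (the union of tags across selected servers and the duplicate-tag fix) come down to keeping tags in a set. The following is only a minimal sketch of that idea, not the actual Scarlet Nebula code; the class and method names are made up for illustration.

```java
import java.util.Collection;
import java.util.LinkedHashSet;
import java.util.Set;

/** Sketch of the tag handling described above, using a set so duplicates cannot occur. */
final class TagLists {
    /** Union of the tags of all selected servers, in first-seen order. */
    static Set<String> unionOfTags(Collection<? extends Collection<String>> tagsPerServer) {
        Set<String> union = new LinkedHashSet<>();
        for (Collection<String> tags : tagsPerServer) {
            union.addAll(tags);
        }
        return union;
    }

    /** Adds whatever is still in the input field, as if the user had pressed ENTER. */
    static void commitPendingInput(Set<String> tags, String pendingInput) {
        String tag = pendingInput.trim();
        if (!tag.isEmpty()) {
            tags.add(tag); // a Set silently ignores a tag that is already present
        }
    }
}
```

A LinkedHashSet is used here so the tags keep the order in which they were first seen, which is usually what a tag list in a GUI wants.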

Wednesday 6 April 2011

Asynchronous interface... the good, the bad and the ugly

- A small modal dialog window, possibly without controls, containing only a progress bar. This is the way VLC does it when rebuilding font buffers.

- The Good: easy to implement, cancellable

- The Bad: Causes an extra level of nesting

- The Ugly: looks like crap, obscures part of the UI

- Change the cursor to a busy cursor and disable the table

- The Good: users are familiar with the busy cursor and know something is happening

- The Bad: the whole cursor thing in Swing sucks. The cursor only changes after the user moves the mouse, and it's tricky to find all the different ways of leaving that screen; if one is forgotten, the user will spend eternity with a busy cursor

- The Ugly: /

- Insert a progress-bar or some other status indication device into the GUI itself.

- The Good: easily recognizable, in my opinion the prettiest of all 3 options

- The Bad: Doesn't look perfect, not cancellable (although that's not strictly necessary, the user can type in a new search term)

- The Ugly: /
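
The third option can be sketched roughly as follows. This is not the Scarlet Nebula implementation, just a minimal illustration of the pattern using java.util.concurrent; the class name AsyncRequest and its parameters are assumptions made for this example.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.Executor;
import java.util.function.Consumer;
import java.util.function.Supplier;

/** Sketch of option 3: run a slow request off the UI thread and toggle an in-GUI busy indicator. */
final class AsyncRequest {
    /**
     * @param request  the slow call (e.g. an HTTP request to the cloud provider)
     * @param setBusy  shows or hides the status indicator
     * @param onResult receives the result once the request has completed successfully
     * @param uiThread executor that runs the callbacks on the UI thread
     */
    static <T> CompletableFuture<Void> run(Supplier<T> request,
                                           Consumer<Boolean> setBusy,
                                           Consumer<T> onResult,
                                           Executor uiThread) {
        setBusy.accept(true); // runs on the calling (UI) thread before the work starts
        return CompletableFuture
                .supplyAsync(request) // runs on a background pool thread
                .whenCompleteAsync((result, error) -> {
                    setBusy.accept(false); // always hide the indicator, even on failure
                    if (error == null) {
                        onResult.accept(result);
                    }
                }, uiThread)
                .thenAccept(ignored -> { });
    }
}
```

In a Swing application one would probably pass SwingUtilities::invokeLater as the uiThread executor and something like busy -> progressBar.setIndeterminate(busy) as setBusy. Swing's own SwingWorker class covers the same ground and may be the more natural choice there.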

Tuesday 29 March 2011

Tagging: a basic implementation

- Not all Cloud Providers support tagging

- Changing tags on a running server is impossible in most Cloud Providers

When linking a previously unlinked server, the cloud provider this server resides in will be queried and the tags (if any) returned will be used by Scarlet Nebula.

The Dasein Tag Format

Dasein does not use a typical interpretation of tags; it instead defines a tag as a (key, value) tuple. This has two complications:

- A Scarlet Nebula tag consisting only of a value needs to be stored as a (key, value) pair

- A Dasein tag consisting of a (key, value) pair needs to be stored as a single value

The second problem is solved by converting (key, value) = (foo,bar) to foo:bar unless it's a Scarlet Nebula tag in which case it will be converted to just "bar".
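
As a rough illustration, the conversion could look like the sketch below. How Scarlet Nebula actually marks its own tags is not described here, so the "sn-tag-" key prefix and all names in this example are purely hypothetical.

```java
import java.util.AbstractMap.SimpleEntry;
import java.util.Map;

/** Sketch of converting between Dasein (key, value) tags and plain Scarlet Nebula tags. */
final class TagConverter {
    // Hypothetical marker: keys starting with this prefix are assumed to hold Scarlet Nebula tags.
    static final String SN_KEY_PREFIX = "sn-tag-";

    /** Dasein (key, value) -> the single string shown in Scarlet Nebula. */
    static String toScarletNebulaTag(String key, String value) {
        if (key.startsWith(SN_KEY_PREFIX)) {
            return value;         // our own tag: only the value matters
        }
        return key + ":" + value; // foreign tag: (foo, bar) becomes "foo:bar"
    }

    /** Plain Scarlet Nebula tag -> a (key, value) pair that Dasein can store. */
    static Map.Entry<String, String> toDaseinTag(String tag, int index) {
        // The index keeps the keys unique when a server carries several tags.
        return new SimpleEntry<>(SN_KEY_PREFIX + index, tag);
    }
}
```

With this scheme a tag written by Scarlet Nebula survives a round trip unchanged, while a tag created elsewhere shows up as key:value.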

Thursday 17 March 2011

Planning

Two weeks from now (before 31st of March): Finish SSH Key wizards & Windows.

Three weeks from now (before 7th of April): Figure out a decent way to execute potentially time-consuming requests asynchronously and at least partially adapt the GUI.

Four and five weeks from now (Easter Holidays): Implement the server statistics stuff. Finish asynchronising some operations. Try adding a dummy "Add a new server"-server to the serverlist (I don't know how possible this will be). File transfers? Driving multiple servers? That should be enough clairvoyance for today...

This week's progress

- When adding a new CloudProvider, the user's credentials are tested to confirm they are valid.

- Made a custom ListCellRenderer for my servers. Also made the code that auto-resizes. It's not perfect when resizing but I suspect this is because of a Swing bug.

- Started work on the SSH key wizards (yes, multiple...) and management Window.

Tuesday 8 March 2011

Upgrading to Dasein Cloud API 2011-02

Tuesday 1 March 2011

A Short and Unordered ToDo List

- Choice of machine image (incl architecture) when starting servers. I wasn't sure this would be possible with a standard Dasein, but after looking through the API docs, I found dasein.cloud.compute.MachineImageSupport which does what I want it to do. I'll have to make some kind of a table based GUI with filters for the machine image selection. I can then deduce the architecture type (x86 or x64) from the chosen machine image.

- Asynchronous GUI when performing networking operations. Since there's no way for me to know how long an HTTP request will take, I'll have to find some way to do this (either nothing special and "busy" cursors, or the clearer-but-harder-to-do progress bar that doesn't indicate progress).

- Statistics for servers, incl defining a format, updating the serverlist's server icons to indicate a server state. More on this later.

- Loading server optimization (small optimization that could save a lot of time when starting Scarlet Nebula with many linked servers)

- Limited size queue for active SSH sessions (e.g. last 5 active ssh sessions stay open, when user attempts to open an ssh session to a server that's currently not connected to, LRU ssh session is automatically closed)

- Fix the resize while SSH terminal is open bug (drawing bug)

- Support for multiple servers, both for simultaneously sending ssh commands to lots of servers and for pausing/starting/terminating/rebooting multiple servers (part of this code is already written)
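
The limited-size queue for SSH sessions from the list above maps quite naturally onto a LinkedHashMap in access order. What follows is only a sketch under that assumption; SessionPool is a made-up name, and the session type is left generic because the real SSH session class isn't shown here.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Sketch of a bounded pool that closes the least recently used session when it is full. */
final class SessionPool<S extends AutoCloseable> extends LinkedHashMap<String, S> {
    private final int maxOpen;

    SessionPool(int maxOpen) {
        super(16, 0.75f, true); // accessOrder = true: get() moves an entry to the "recent" end
        this.maxOpen = maxOpen;
    }

    @Override
    protected boolean removeEldestEntry(Map.Entry<String, S> eldest) {
        if (size() <= maxOpen) {
            return false;
        }
        try {
            eldest.getValue().close(); // close the LRU session before it is evicted
        } catch (Exception e) {
            // A failure to close should not prevent opening the new session.
        }
        return true;
    }
}
```

Looking a session up with get() counts as using it, so with a limit of 5 the session that has gone unused the longest is the one that gets closed when a sixth is opened.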

Friday 26 November 2010

Starting an Amazon EC2 Server from scratch using Tim Kay's aws tools

Before we start, good luck and godspeed, this will be a long haul.

Installing and configuring the aws tools

First, download the tools. These can be found on http://timkay.com/aws/. Using them requires curl to be installed (use your package manager to install it, e.g. sudo apt-get install curl if you're on a Debian-based Linux like Ubuntu).

Get the tools as explained on the page linked to above. Installation is optional. Just make sure you're in the folder in which you downloaded the "aws" file when using the tools and don't forget to chmod u+x aws if you prefer not installing the tools. I won't be using an installed version in this tutorial so if you do install, replace all instances of ./aws with aws (this will make bash look for aws in your path instead of your current directory).

Don't forget to create a file in your home directory called .awssecret containing your access key ID and secret access key. This file looks like

5esf4s6e5f4s6e54f6se

qlekfjqselfkj561e6f51se+esjfkse

with the first line containing your api key and the second your api secret.

General notice

One very important thing to note is that there isn't exactly "one" Amazon EC2. There are several, and the one you should choose depends on your location. These are the so-called endpoints I've talked about before. If you're in the US, you can just use ./aws without doing anything extra and you'll be using the (default) US endpoint. If you're in Europe, you probably want to use the European endpoint. With Tim Kay's aws tools this is done by giving the extra parameter --region=eu after every command. That's right, every single one.

If you're like me and forget to do this every other command, you can execute the command

echo "--region=eu" >> ~/.awsrc

exactly once. This will write the option to a file that's used as a set of default options for every aws command. This way you don't have to add the --region parameter to the end of every line and endpoints shouldn't bother you too much.

Creating a new SSH key

Don't confuse this key (the SSH key) with the API key you entered in the ~/.awssecret file. The key in ~/.awssecret is the key you need when talking to the Amazon API; the key you'll generate here is the key you'll need to talk to your instances. Instead of giving you a password to connect to your EC2 instance, Amazon allows (well, forces) you to use a more secure RSA key to set up an ssh connection to your instance(s).

Generating this key is easy. Execute the command

./aws add-keypair default > defaultawskey.key

This generates a key named "default" (when talking about this key to Amazon, you'll refer to it as "default" or whatever you named the key) and places your private key in a file called "defaultawskey.key" in your current directory. This is a normal run-of-the-mill SSH key. SSH won't accept the key file as it is, though: by default it is probably readable by everyone, a security problem which annoys ssh. You can fix this by restricting access to yourself (so other users on your local machine can't read the key file) with

chmod 600 defaultawskey.key

Allowing SSH access to your server by configuring a group

If you want your server to do anything, you'll probably need it to execute commands. When starting a new instance, you'll specify a security group. This group will tell the server's firewall what ports to open. Practically, you want to open a communication channel on TCP port 22 (SSH traffic). You can do this either by modifying an existing security group (one called "default" is created for you) or by creating a new one. Suppose we want to create a new group called "sshonly" and only allow ssh traffic in this group.

Making a new group called sshonly is done with the command

./aws addgrp sshonly -d "Only ssh traffic"

The text in the -d argument is obligatory and contains a short description of your group.

By default, this group is empty (it does not contain any rules). You can confirm this by executing

./aws describe-groups

The output is too wide to display here, but you'll notice nothing is written in the rightmost few columns on the "sshonly" row. This is because there are no rules for this group.

Once this new group is created, a new rule can be added to it. This is done with the authorize command.

./aws authorize sshonly -P tcp -p 22 -s 0.0.0.0/0

./aws describe-groups

Note the new table containing the columns

ipProtocol | fromPort | toPort | groups

tcp | 22 | 22 | item= userId=x cidrIp=0.0.0.0/0

A good sign indeed.

Finding an AMI

An AMI, or Amazon Machine Image, identifies an image you can use to base your instance on. You can request all possible AMIs by executing the command

./aws describe-images > amis.txt

This command writes all available AMIs to a file called amis.txt in the current directory. Executing this command can and will take a while (it's a very big list). You're best off grepping through it. When you find an image you like (a simple i386 image with a recent Ubuntu version on it will probably do fine), take note of the string in the first column (ami-xxxxxxxx); you'll be needing this. The one I chose was ami-d80a3fac (an Ubuntu 10.10 server image).

Starting a new instance

Actually starting a new instance is done by executing

./aws run-instances ami-d80a3fac -g sshonly -k default

This will start a new instance with ami "ami-d80a3fac", security group "sshonly" and the key named "default" (remember, this was the one for which we stored our private key in the file defaultawskey.key). After pressing enter, the new instance will be in status "Pending". This means Amazon is getting the machine ready for usage. Wait about a minute and run the command

ives ~/aws $ ./aws describe-instances --simple

i-44e3d333 running ec2-79-125-77-241.eu-west-1.compute.amazonaws.com

Keep running the describe-instances command until a DNS address appears and the state is "running" (not "pending"). Your new instance can be reached on the address given in the describe-instances output, e.g. "ec2-79-125-77-241.eu-west-1.compute.amazonaws.com" and the instance is named "i-44e3d333".
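
When doing the same thing from code rather than by hand, this "keep asking until it's running" step is a simple polling loop. The sketch below assumes some hypothetical hook (currentState) that returns the instance state as a string such as "pending" or "running"; how that state is actually obtained (Dasein, the aws tool, ...) is left out.

```java
import java.util.function.Supplier;

/** Sketch of polling an instance until it leaves the "pending" state. */
final class InstancePoller {
    /**
     * @param currentState hypothetical hook returning the instance state, e.g. "pending" or "running"
     * @return true if the instance reported "running" within maxAttempts polls
     */
    static boolean waitUntilRunning(Supplier<String> currentState, int maxAttempts, long delayMillis) {
        for (int attempt = 0; attempt < maxAttempts; attempt++) {
            if ("running".equals(currentState.get())) {
                return true;
            }
            try {
                Thread.sleep(delayMillis); // give Amazon some time before asking again
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt(); // preserve the interrupt and give up
                return false;
            }
        }
        return false;
    }
}
```

Capping the number of attempts avoids waiting forever on an instance that never comes up.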

Executing commands on your instance

We're finally here! We now have SSH access to our running instance. Give the command

ssh -i defaultawskey.key root@ec2-79-125-77-241.eu-west-1.compute.amazonaws.com

with "defaultawskey.key" being the file to which your RSA key was written in the section "Creating a new SSH key" and "ec2-79-125-77-241.eu-west-1.compute.amazonaws.com" being the address your instance can be reached on, given by the describe-instances output.

Doing this should give you something like

RSA key fingerprint is 58:ff:ff:42:f1:8e:46:86:71:ef:a6:66:d5:43:4a:d4.

Are you sure you want to continue connecting (yes/no)?

Enter "yes", press return and with some luck you can enjoy your brand new instance.

If you get the error

Please login as the ubuntu user rather than root user.

Log in as the user called "ubuntu" instead of "root", like this:

ssh -i defaultawskey.key ubuntu@ec2-79-125-77-241.eu-west-1.compute.amazonaws.com

And everything should be fine. If you got to this point, congratulations, I know it wasn't easy! Anyhow, read on and don't forget to terminate your instances! They're not free.

Terminating instances

./aws describe-instances --simple

The column on the left contains the instance ID. Pass this ID to ./aws terminate-instances like this:

./aws terminate-instances i-44e3d333

with "i-44e3d333" being the instance ID that describe-instances gave you. After entering this command, the describe-instances listing will look like this:

ives ~/aws $ ./aws describe-instances --simple

i-44e3d333 terminated

The instance will keep its "terminated" status for a few hours and will then disappear.

Conclusion

This post of Steve Yegge-an proportions (more than 1500 words) should help you when you're trying to start your first instance. It was long and it was hard, but this was probably the hardest part. The next time you're starting new instances, you won't have to repeat the first few steps: you won't need to create a new ssh key (use the existing one), create a new group or add rules for that group. Just find a good AMI, execute the run-instances command and you should be on your way.

Wednesday 24 November 2010

An updated design

- Keeping track of endpoints

- Saving and loading cloudprovider specific data, like api keys and stuff like that

Initially I had both an InstanceManager and a CloudProvider, when I noticed almost every method of InstanceManager took a CloudProvider as its first parameter -- resembling a primitive way to implement object orientation. This of course was my cue to merge them together.
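
To illustrate the point, here is a tiny sketch of what the merged class might look like. The method names are invented for this example and the instances are reduced to plain ID strings; it only shows how the former "provider as first parameter" turns into the object itself.

```java
import java.util.ArrayList;
import java.util.List;

/** Sketch: the provider owns its instances, so no separate manager needs a provider argument. */
final class CloudProvider {
    private final String name;
    private final List<String> linkedInstanceIds = new ArrayList<>();

    CloudProvider(String name) {
        this.name = name;
    }

    // Before the merge this would have looked like InstanceManager.linkInstance(provider, id).
    void linkInstance(String instanceId) {
        linkedInstanceIds.add(instanceId);
    }

    // Before the merge: InstanceManager.listLinkedInstances(provider).
    List<String> listLinkedInstances() {
        return new ArrayList<>(linkedInstanceIds); // defensive copy
    }

    String getName() {
        return name;
    }
}
```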

As a side note: the implementation of this is coming along relatively nicely. Using Tim Kay's aws tools I successfully booted a server (I'm not quite at that point in my own code yet but I did need to see a server in action to test the server listing code).

Booting my first server and connecting to it wasn't exactly pleasant. I'm sure this is a piece of cake in Amazon's web interface, but the same can't be said of the console interface. Steps for doing this included

- Finding out how to generate an ssh key

- Finding out where to look up AMI IDs (Amazon Machine Images, server templates)

- Finding out what a security group is and why I need one

- Finding out SSH isn't allowed through the firewall by default

- Finding out how to add a rule to a group that allows SSH traffic through the firewall

- Finding out how to boot a new instance from an AMI that allows access through SSH with the previously generated key and runs in the group that passes SSH traffic

- Finding out what login information to use and how to pass a key to ssh on the fly

So yeah, that took me a while...

Saturday 20 November 2010

Amazon EC2, Dasein and Endpoints

Wednesday 17 November 2010

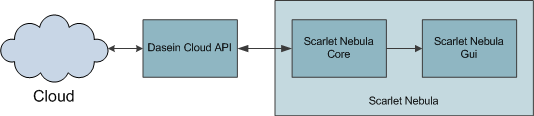

Initial Design of the Scarlet Nebula Core

The Scarlet Nebula Core

It will look (probably vaguely) like this:

Tuesday 9 November 2010

Early Schedule

Wednesday, November 10th

- Making this schedule

- Writing out an initial design

Wednesday, November 17th

- Compiling the Dasein Cloud API

- Listing servers

Wednesday, December 1st

- Starting and stopping instances

Wednesday, December 8th

- Remotely executing commands

That's enough clairvoyance for now...

Tuesday 2 November 2010

Graphics libraries for the GUI

- Abstract Window Toolkit: Too basic.

- Swing: Looks nice, supports relatively complicated constructs like treeviews without problems, Model-view-controller based

- Qt Jambi: Not very well known in the Java community (relatively new), still a Java interface to a C++ library built on C code

Saturday 30 October 2010

The Dasein Cloud API

Support

The DCA supports a wide range of cloud providers. As of June 2010 this list includes the following public clouds:

- Amazon Web Services

- Azure

- GoGrid

- Rackspace

- ReliaCloud

- Terremark

- Google Storage

- Cloud.com

- Eucalyptus

- vSphere

Since new providers can also be added, this should be more than adequate.

Architecture

One of the major advantages of the DCA is that it does not suffer from an ailment that frequently plagues APIs: only implementing the lowest common denominator of everything that's available. The DCA supports a wide variety of clouds, ranging from a simple public storage service to private computing clouds, and does so in a relatively clean way.

Features

The DCA's features include the concepts of

- Servers

- Server Images

- Distributions (in a CDN context)

- Volumes

- Snapshots

- Firewalls

- IP Addresses

- Relational Databases

- Load Balancers

- Geographical Regions

- Datacenters

- Cloud files

Furthermore, the DCA also supports the VMware vCloud API.

Conclusion

The DCA should be adequate for pretty much all of my requirements. If a functionality gap does appear, the DCA's code looks well written and should be easily extendable.

Sunday 24 October 2010

Introduction & Preliminary Feature Set

Introduction

Scarlet Nebula is going to be a Java-based program mainly for managing VM (Virtual Machine) based IaaS (Infrastructure as a Service) clouds.

Features

- Mixed clouds (communicating servers from different cloud providers, possibly even private clouds)

- A tree based navigational structure for servers. This will allow for grouping of servers and scaling from a single to thousands of servers.

- Statistical tracking of the amount of time servers are online

- Graphs for tracking stuff like CPU usage and other useful data on the "health" of a server.

- Extensive scripting that allows for starting new servers with a single click.

- Triggers that can send alerts in the form of emails, execute scripts,...

And of course the obvious ones like

- Starting new servers

- Executing commands on one or more servers

- Transferring files to one or more servers

- Shutting servers down

- Auto-scaling

Under the motto "all code is better than no code" the Dasein Cloud API seems somewhat interesting. Although the entire Dasein documentation seems to consist of 1 short page of API reference and less than a handful of short code examples, code quality seems to be okay and it is still being actively developed.